(source: http://autoescuelapf.com/images/test 2.jpg)

Why do we deal with on-line test development?

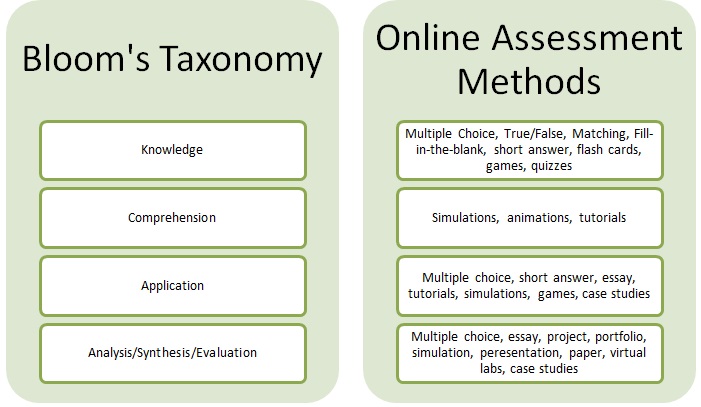

source: http://sites.psu.edu/onlineassessment/wp-content/uploads/sites/3535/2013/04/bloom-MATCH.jpg

Teachers have used tests in almost all fields of education for a long time, certainly since the Second World War. Over the last 50 years testing has reached even the most remote areas and all countries, and it is now used globally in formal and informal settings, in general as well as in higher and vocational education.

But does this mean that we are prepared to design and use tests in on-line settings too? When we design and use tests, we often fail to concentrate on the most basic rules of design. We struggle with the ever-growing body of assessment experience, with more and more intelligent applications, and with the similarly growing number of test types, assessment settings and possibilities. This unit summarises the most important, a.k.a. "golden", rules of test making and tries to offer an easy structure for choosing and designing an appropriate on-line test, either from scratch or by adapting an existing paper-based test for re-development.

What is an "on-line test"?

In our context a test is a type of assessment in the educational field. There are many other kinds of tests, such as psychology tests, sport tests, medical tests, employability tests and many others; these will not be discussed here.

A possibly good definition is: a test "is an assessment intended to measure a test-taker’s knowledge, skill, aptitude, physical fitness, or classification in many other topics" (https://www.merriam-webster.com/dictionary/test).

An on-line test is an on-line assessment, often called e-assessment or electronic assessment. The term simply states that the assessment uses a computer, or a computer connected to a network.

There are many aspects of testing, many classifications and areas that we will not deal with. Testing, measurement and evaluation methodology is a whole science. However, we may state that:

- Even with paper-based tests there are some important technical rules related to question types that we can highlight.

- With the introduction and ever-growing use of on-line tests, we have to develop our knowledge of their role, length, sequence, types, etc. Paper-based testing experience is simply not enough in the on-line world.

Closed type (closed-ended) questions

(source: https://fluidsurveys.com/wp-content/uploads/2013/08/openvsclosed-672×300.png)

From an instructional point of view we split test item types into two big categories for technical purposes: closed and open type questions. The simple reason is that closed type questions (where the answer is pre-defined) can be assessed automatically, while open questions (where the answer is freely constructed) are still assessed manually by teachers (tutors, instructors), although with the development of AI (artificial intelligence) this statement will soon have to be revised. In instructional learning theory instant feedback is essential for effective learning, therefore in computer-based on-line evaluation we concentrate on closed type questions. It is important to mention that some question types are methodologically different but technically the same: "odd one out", for example, is technically a simple multiple choice question.

Traditional question types with some hints

| Question | Characteristics | Pro | Contra | Hint |
| --- | --- | --- | --- | --- |
| True/false | The simplest question type. Best for definitions or statements. | Simple, quick, unambiguous. | Easy to guess. If used frequently, it may become boring. | Use proper statements. Use negative scoring for wrong answers to minimise guessing. Try to build a multiple choice question out of 4-5 true/false questions. |
| Multiple choice | Most commonly used type. Almost every closed type test can be converted to multiple choice. Its two main subtypes are single answer and multiple answer. | Well known. Most cognitive skills can be tested with it. Easy to give feedback on each distractor. | Easy to guess if the number of distractors is low (2-3). Sometimes the correct solution is ambiguous. | Use realistic distractors. Use the same complexity in all distractors. Avoid grammatical help in distractors. Use at least 4-5 distractors. |
| Matching | Relatively well known and powerful question type for a number of cognitive skills. It is also called pairing. | Good for finding pairs of words, expressions, definitions. Good for categorisation. Good for ranking as well. | At least three possibilities must be offered, as two would allow a 50% chance of guessing. The last choice can be logically guessed. Feedback design is complicated. | Avoid grammatical help in the choices. Try to design more answers than questions. In case of ranking, avoid shuffling. |
| Short answer (fill in the blank) | Powerful tool for active remembering. | You can test active knowledge. No ambiguity with distractors. | Hard to evaluate "almost" good answers: typos, accents, conjugation, etc. | Use only for the clearest rote-recall (memoriter) situations: names, specific expressions, terms. Give as explicit guidance in the question as possible. |
| Numerical | Powerful tool for remembering numbers. A variation of short answer. | The same pros as for short answer, but with less uncertainty in evaluation. | There is still some room for misunderstanding: Roman numerals, decimal points, written form of numbers. | Give as explicit guidance in the question as possible. |
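The short answer row notes that "almost" good answers (typos, accents, capitalisation) are hard to evaluate. As a minimal sketch of one common mitigation, and not of how any particular testing system works, the answer can be normalised before comparison. The function names `normalise` and `is_accepted` are hypothetical, and stripping accents is an assumption that may be wrong for languages where accents change the meaning.

```python
import unicodedata


def normalise(answer: str) -> str:
    """Strip accents, ignore case and collapse surrounding/repeated whitespace."""
    decomposed = unicodedata.normalize("NFKD", answer)
    # Drop combining marks (the accents split off by NFKD decomposition).
    without_accents = "".join(
        ch for ch in decomposed if not unicodedata.combining(ch)
    )
    return " ".join(without_accents.casefold().split())


def is_accepted(given: str, accepted: list[str]) -> bool:
    """Return True if the normalised answer matches any accepted variant."""
    return normalise(given) in {normalise(a) for a in accepted}


# Case, spacing and accents are forgiven; a real typo is still rejected.
print(is_accepted("  PAVLOV ", ["Pavlov"]))
print(is_accepted("Pavlow", ["Pavlov"]))
```

Genuine spelling mistakes are deliberately not forgiven here; accepting them would need a list of allowed variants or a similarity threshold, which is a design decision for the test author.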

More on this: https://sites.psu.edu/onlineassessment/gather-evidence/, https://monk.webengage.com/creating-awesome-survey-questionnaires/

On-line solutions and hints

Since on-line testing was introduced, many more question types have been developed. Some of them are only technically different but methodologically equal to the well-known question types listed above, while others are completely new. Let us list some types in addition to the main list above:

- Select missing words from a dropdown menu. It is methodologically equal to a multiple choice question. If a text contains several missing words, then it is a combined multiple choice test. Hint: it is hard to develop specific feedback if several words are missing.

- Drag and drop into text. If there is one missing word, it is equal to a multiple choice question; if there are several missing words with different sets of (typically coloured) choices, it is a combined multiple choice test. If there are several missing words with one set of choices, then it is equal to a matching question. Hint: feedback construction needs attention when more than one word is missing.

- Drag and drop labels (markers) into image. This is a graphical test, where we show with a marker text (label) the required area.

- Drag and drop images to a background image. It is also a graphical test type, with more possibility of graphical construction.

- Calculated answer. This type of question is similar to the numerical question, but the correct answer is calculated by a formula or function, and the learner's answer may be accepted within a pre-set tolerance.
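The calculated answer type from the list above can be sketched as follows. This is only an illustration under stated assumptions: the formula (area of a circle), the function names and the 1% relative tolerance are all hypothetical choices, not the behaviour of any specific system.

```python
import math


def expected_answer(radius: float) -> float:
    """Hypothetical question formula: area of a circle with the given radius."""
    return math.pi * radius ** 2


def is_within_tolerance(given: float, expected: float, rel_tol: float = 0.01) -> bool:
    """Accept the answer if it differs from the expected value by at most
    rel_tol times the larger of the two magnitudes (math.isclose semantics)."""
    return math.isclose(given, expected, rel_tol=rel_tol)


correct = expected_answer(2.0)  # about 12.566
print(is_within_tolerance(12.57, correct))  # rounded answer
print(is_within_tolerance(12.0, correct))   # off by more than 1%
```

Whether the tolerance is relative or absolute changes which rounded answers are accepted, so the question text should state the expected precision.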

There are, however, as many question names and types as there are vendors on the market. There are custom-programmed systems as well, where we may encounter different implementations of closed type questions.

Hints for paper-based quiz practitioners in an on-line environment

- Electronic testing systems may shuffle questions and items, hence there is no need to number the choices (although it is not forbidden).

- There is no need to decide manually which position holds the correct answer; it is quicker to stick to a local rule, for example that the first choice of every question in the question bank is the correct one.

- Feedback is important in testing. It is highly recommended to author feedback for all test items, even for redeveloped sets of old quizzes.

- For practitioners who are used to feedback but new to complex learning environments, it is recommended to check the feedback patterns generated by the system, to avoid duplicating feedback text.

- Learning management systems (like Moodle) offer a large variety of ways to enter feedback: general feedback, specific feedback for each choice, combined feedback for correct, partially correct or incorrect answers. It is recommended to explore the specific roles of, and differences between, those feedback types.

- The role and position of test items may differ from traditional post-test type quizzes, therefore it is important to learn about on-line evaluation methodology before deciding between the different possibilities.
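The first two hints work together: if the correct answer is always stored first in the question bank, the system can shuffle the choices at display time and still know where the correct one ended up. A minimal sketch, assuming a hypothetical `present` function and the "first choice is correct" local rule described above:

```python
import random


def present(choices: list[str], rng: random.Random) -> tuple[list[str], int]:
    """Shuffle choices for display and return the new position of the
    correct answer, assuming the correct answer is stored at index 0."""
    order = list(range(len(choices)))
    rng.shuffle(order)
    shuffled = [choices[i] for i in order]
    correct_position = order.index(0)  # where the stored first choice landed
    return shuffled, correct_position


# Hypothetical question bank entry: the correct answer is listed first.
stored = ["Paris", "Lyon", "Marseille", "Toulouse"]
shown, correct_at = present(stored, random.Random())
print(shown, "correct choice is at position", correct_at)
```

Because the order is decided at display time, each learner (or each attempt) can see a different arrangement without any extra authoring work.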

Typical on-line settings

Having shown that on-line testing can be technically different, we turn to an area that never occurred in the non-digital (print) environment and needs some introduction: the settings of the on-line test. Settings differ between systems, but in most cases there are settings for test items and settings for the quizzes that contain a selection of items.

Item settings

- Question name

- Default mark

- Shuffling the choices

- Numbering the choices (a, A, 1, i, I)

- Grade setting (from 100% to -100%)

- Feedback: General, Choice, combined

- Multiple tries: Hints
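The grade setting (from 100% to -100%) is what makes negative scoring possible. As a hedged illustration of why it discourages guessing, the sketch below computes the average score of a learner who picks at random on a single-answer item worth one mark. The function name and the equal-probability guessing model are assumptions made for this example only.

```python
from fractions import Fraction


def expected_guess_score(n_choices: int, penalty: Fraction) -> Fraction:
    """Average mark for a blind guess on a single-answer item worth 1 mark,
    where each wrong choice costs `penalty` marks (0 means no negative scoring)."""
    p_correct = Fraction(1, n_choices)
    return p_correct - (1 - p_correct) * penalty


# True/false with -100% for the wrong answer: guessing averages to zero.
print(expected_guess_score(2, Fraction(1)))
# Four choices without a penalty: guessing still earns a quarter of the mark.
print(expected_guess_score(4, Fraction(0)))
# Four choices with -1/3 for each wrong choice: guessing averages to zero again.
print(expected_guess_score(4, Fraction(1, 3)))
```

Under this model a penalty of 1/(n-1) for n choices removes the average benefit of guessing; larger penalties punish guessing, which may also discourage learners who are only partly sure.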

Quiz settings

- Time: open, close, time limit, time between attempts

- Attempts: one, more, unlimited

- Grading: grade to pass, method (first, last, highest, average)

- Item behaviour: shuffle mode, behaviour (adaptive, deferred feedback, immediate feedback)

- Review: During the attempt, after the attempt, later in open mode, after close

- Overall feedback: setting of quiz feedback between grade boundaries from 0% to 100%

- Selection and weighting of items: specific or random selection from the question bank with possible weighting between different items.
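The grading settings above (grade to pass, and the first / last / highest / average methods) can be illustrated with a short sketch. The function names and the sample attempt scores are hypothetical; real systems may round or weight attempts differently.

```python
from statistics import mean


def final_grade(attempts: list[float], method: str) -> float:
    """Combine the percentage scores of several attempts into one grade."""
    if not attempts:
        raise ValueError("at least one attempt is required")
    methods = {
        "first": lambda scores: scores[0],
        "last": lambda scores: scores[-1],
        "highest": max,
        "average": mean,
    }
    if method not in methods:
        raise ValueError(f"unknown grading method: {method}")
    return methods[method](attempts)


def has_passed(attempts: list[float], method: str, grade_to_pass: float) -> bool:
    """Apply the grading method, then compare with the pass threshold."""
    return final_grade(attempts, method) >= grade_to_pass


scores = [40.0, 75.0, 60.0]  # hypothetical attempts, in percent
for name in ("first", "last", "highest", "average"):
    print(name, final_grade(scores, name), has_passed(scores, name, 60.0))
```

The same three attempts pass or fail depending on the method chosen, which is why the grading method should be decided together with the number of allowed attempts.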

Grading approach

Why do we deal with grading? Because in an on-line testing environment we need to use a grading system. But there are many local requirements, regulations and traditions for grading. In addition, we create courses in the complex contexts of higher, vocational and general education. Well-designed learning management systems like Moodle or Ilias make it possible to build different taxonomies for different course environments.

The default grading is normally a 100 point system, where it is easy to show learner achievement as a percentage. But many environments need to be adapted to a 1-5 (5-1), 1-7 or 1-20 grading (scaling) system. There are also simpler grading systems in adult education, like excellent-good-fair-fail or simply pass-fail.
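Adapting a percentage to a local scale is essentially a lookup against grade boundaries. The sketch below maps a percentage to a 1-5 scale; the boundary values (50, 65, 80, 90) and the names are purely hypothetical, since actual boundaries depend on local regulations.

```python
from bisect import bisect_right

# Hypothetical lower bounds (in percent) for grades 2, 3, 4 and 5.
BOUNDARIES = [50, 65, 80, 90]
GRADES = [1, 2, 3, 4, 5]


def to_five_point(percent: float) -> int:
    """Map a 0-100 percentage to a 1-5 grade using the boundaries above."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # bisect_right counts how many boundaries the score has reached.
    return GRADES[bisect_right(BOUNDARIES, percent)]


for score in (49.9, 50, 79.9, 80, 100):
    print(score, "->", to_five_point(score))
```

A pass-fail or excellent-good-fair-fail scale follows the same pattern with a different list of boundaries and labels.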

Some grading systems:

Open type (open-ended) questions

Earlier in this unit we stated that there are two types of questions, open and closed, but so far we have dealt only with the commonly used closed type.

Open type questions still have their relevance in many educational environments. The specific feature of this question type is that it has to be evaluated manually by a teacher or tutor. This slows down the feedback loop; however, the evaluated artifact (an essay or any other uploaded document) may show student performance better. When an open question is part of a quiz, the overall grading of the quiz cannot be finished until the manual marking is done.

Comparison of open and closed type of questions: http://fluidsurveys.com/university/comparing-closed-ended-and-open-ended-questions/

Hints for open type questions in an on-line environment

- Use them only for testing complex cognitive skills.

- Formulate the essay question to get different personal solutions from each learner.

- Give as much guidance as possible for the essay to be written.

- Use a pre-prepared template or grid (a structure) to help learners.

- Prepare for yourself and for your colleagues a sample essay that represents a perfect artifact for grading.

- If the system allows, provide evaluation, marking and feedback information for graders, with examples of typical mistakes, in a hidden area.

More on essay grading:

Voting, polls, synchronous testing

(source: http://cyberthevote.org/wp-content/uploads/2012/02/Cyber-The-Vote-Polling-Place.jpg)

Until now we have dealt with the classic type of on-line testing (e-assessment): the individual one. Individual learning and assessment methods dominate in education, but collaborative methods and assessment are up-and-coming, so we have to follow their development.

Voting, polls

For most of us, voting and polls are familiar, and we take part in them frequently in non-educational situations. We are also familiar with some aspects of educational quality development, where polls about teacher performance or voting for our representatives in our schools are quite common.

There are on-line tools to help with those activities, like Doodle, LimeSurvey or Google Forms.

It is not surprising that those tools have also made their way into learning management systems like Moodle. Let us go one step further and start thinking about using those tools for collaborative learning methods. How can we use voting or polls in on-line collaborative learning?

- With group choice (polling or voting) tools you can help your audience to organise groups themselves.

- Offering a choice to the whole audience or learning groups: to select a topic, to select a working method.

- With polling tools you can ask stimulating questions to the whole audience or working group.

- With polling tools you can test working groups’ achieved level of understanding in their topics, or test their attitude with the actual collaboration.

- With longer surveys (directed to groups) you can organise group assessment. (Quizzes are always dedicated to individual learners.)

Synchronous testing

Collaborative on-line assessment can obviously be done simultaneously, which is called synchronous assessment or testing. We discuss it separately because the educational situations in which it is used, and the tools, are different. Synchronous testing can be done in classroom situations, but also in remote web conferencing situations.

In a classroom there are easy-to-use tools for organising collaborative learning and collaborative assessment, even in elementary schools. Those applications are designed to help teachers organise quiz games in their classes, using a centrally projected quiz screen. Learners enter their answers on their own smartphones or tablets. In case of limited resources, groups may be formed in line with the number of devices available in the class. Most commonly used tools are:

Finally, let us take one step further: could we use synchronous testing tools in remote situations? Why not? In videoconferencing we can share the teacher’s screen with the quiz questions, and the audience may use their smartphones to answer. Some vendors, like Adobe, have already introduced synchronous polling and voting tools in their applications.

On-line test development by Denes Zarka is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

This project has been funded by the Erasmus+ programme of the European Union. This web site reflects the views only of the authors, and the Commission cannot be held responsible for any use which may be made of the information contained therein.